Roni Rosenfeld said he would never dream of using ChatGPT at work, but he understands how it could be tempting, and — yes — even useful for others. Maybe. Just don’t expect it to be accurate, uphold your company’s policies, or even do exactly what it’s told.

ChatGPT, an artificial-intelligence chatbot, is a product of OpenAI, and made its free, public debut late last November. It can solve problems, write memos and produce creative writing, and some claim it can even be employed by financial advisers to pick stocks.

But critics say it’s rife with errors, biases, and cultural tropes gleaned from the people that inspired it. AI, they say, could open companies to allegations of discrimination in employment practices and, rather than replace menial tasks, lead slackers to use it for jobs that require human creativity and oversight.

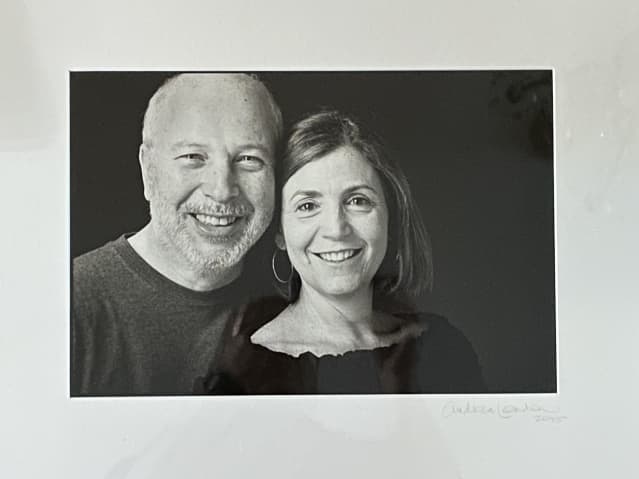

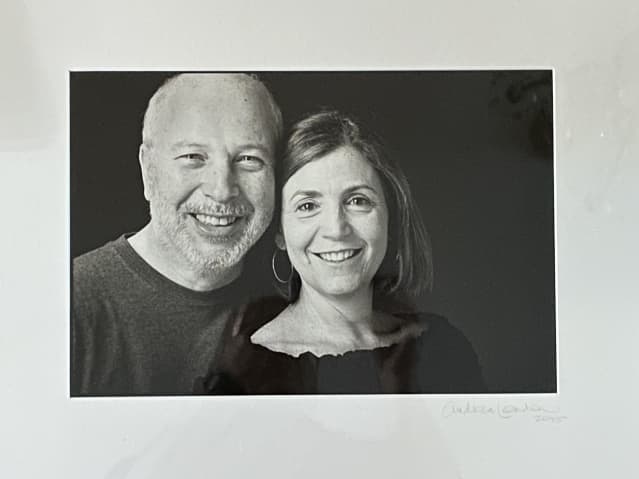

Rosenfeld, a professor of machine learning at Carnegie Mellon University, recently asked GPT-4, a $20-a-month premium version of the AI software, to write a poem to his wife, a technology entrepreneur, to celebrate their wedding anniversary, using only words from a list of about 70 that he had chosen.

The words included: “Automobile. Good. Wedding. Groom. Relax. Bride. Vacation. Husband. Dress. Ocean.” On each attempt, he asked it to be more humorous, more whimsical, more forward-looking and even something “a little less cheesy,” among other instructions.

The AI platform spun into action. But GPT-4 at first used words beyond those he had suggested. He had to point out the errors, and ask it to abide by his original instructions. “It doesn’t own mistakes, but it admits them when you point them out,” Rosenfeld told MarketWatch.

It’s a chilling reminder that AI, in its latest incarnation as ChatGPT, is the online engineer who might cut corners; who takes inspiration from other people’s work; and who can concoct a mince pie of suggestions and facts, along with a combination of inaccurate aggregations, lies and fiction.

Rosenfeld employed AI to complete a task, but it took a lot of coaxing, even for a low-risk venture like a love poem. It proved to be a highly efficient slacker: not something, or someone, he would recommend as an honest candidate.

Earlier this month, Steven A. Schwartz, a lawyer with the firm Levidow, Levidow & Oberman, admitted to a Manhattan federal judge that he had used ChatGPT to compile a 10-page brief for a client who was suing an airline, alleging he was injured when he was struck by a metal serving cart during a flight.

The legal brief contained cases (Miller v. United Airlines, Petersen v. Iran Air and Varghese v. China Southern Airlines) that had no basis in reality, according to the New York Times, which first reported on the case. In fact, it contained half a dozen bogus cases. Schwartz reportedly asked ChatGPT if the cases were real and, even though they were fictitious, it vouched for them.

Roni Rosenfeld and his wife.

In an affidavit, Schwartz told the judge that he “greatly regrets” using AI for legal research and “will never do so in the future without absolute verification of its authenticity.” The lawyer took full and sole responsibility for submitting false information.

ChatGPT is not the most reliable assistant. As Rosenfeld discovered: “It’s not trained to give correct answers. It’s trained explicitly to appear helpful and informative, to give answers that people would like. It’s not surprising that it’s become very good at being impressive.”

Not everyone agrees. MarketWatch columnist Peter Morici believes ChatGPT and its rivals will continue to evolve to a point where they will mimic many jobs. “Creating tools that can help lawyers draft briefs and programmers write code more quickly, automate aspects of white collar and managerial critical thinking and assist with elements of creative processes offers huge business opportunities.”

“It passed a tough undergraduate microbiology exam, and graduate law and business school exams from the Universities of Minnesota and Pennsylvania,” Morici recently wrote. “It’s been paired with the email program of a dyslexic businessman to assist with clearer communications that helped him land new sales.” He said it will free up people to do more nuanced and sophisticated tasks.

But even if it does work with increasing accuracy, should you rely on it? Use ChatGPT at work at your peril, experts warn, even if it’s for something as simple as writing or editing a memo, and even if the result is accurate. “It’s a laziness alarm,” said Aram Sinnreich, who co-authored the nonfiction book “The Secret Life of Data” with Jesse Gilbert.

And if you are using AI to write that report and meet your deadline? “It’s like waving a red flag,” Sinnreich told MarketWatch. “If a skilled, experienced professional uses AI to generate their professional output, it’s a sign that their field is stultified and repetitive. That’s a much bigger crisis.”

“It’s a crisis that people have very little faith in the authenticity or humanity of their professional field, that they don’t believe their labor is valuable,” Sinnreich added. “I would never use it because I care about what I’m saying.”

“The experience of researching and writing it is why I’m doing it, because I’m invested in the process and the product,” he said. “Using ChatGPT is, in itself, an epiphenomenon of bureaucracy and the commercialization of human expression.”

That’s a big statement, but he breaks it down with an example: a “phenomenon” is that fast-food menus are less healthy than they were, say, 30 years ago (according to some studies), while an “epiphenomenon” is that bad diets are associated with a higher risk of diabetes.

Some workers claim they’ve used ChatGPT on the down-low to organize data in an Excel-friendly manner or chat with their boss online. “All of these people are sneakily adopting ChatGPT in their jobs,” Sinnreich said. “They are focused on the product — not the process.”

“They believe that their labor doesn’t matter, and they’re rubber-stamping the rhetoric of some higher authority and feeling alienated by their jobs,” he said. “It’s almost a cry for help. It’s a sign that their fields are not making them feel valid or valuable or personally engaged.”

Others say there’s room for using ChatGPT and similar AI technology at work — in very limited circumstances. Luis A.N. Amaral, a professor at Northwestern University in Evanston, Ill., who led the data science initiative at the university, sees some benefits.

“As the accuracy of AI gets better and better, it will become progressively harder for people to avoid the temptation to rely on it, with rare but inevitable bad consequences,” he said. What’s more, there is obviously not just one kind of AI. ChatGPT is a specific form of AI called “generative AI,” or GAI, which emerged very recently, and which relies on large language models (LLMs), Amaral said.

Older forms of AI have been with us for several decades, including recommendation systems, face recognition and candidate ranking. “These older forms of AI don’t have the ‘look and feel’ of human interaction, and don’t create the impression of truly human-like intelligence,” he said.

ChatGPT’s blandness can be an advantage, if you wish to remove emotion from an email or memo. If you have problems putting words down on paper, if you can’t view a situation objectively, or if you are afraid that you could come off as defensive, you could, in theory, use it to write a friendly, non-threatening email to your boss, Amaral added. But proceed with caution.

Here’s a “fake” letter to a manager filled with grievances:

To whom it may concern:

I’ve been an employee of this company who has worked tirelessly and without complaint in the face of rising costs and dwindling resources for five years, and during that time I have done my utmost to be the best financial adviser I could be for our clients, even when those clients were difficult and downright rude to me. I have often wanted to tell them where to go, but I have bitten my tongue, and told myself to be the bigger person.

However, I feel like my current salary does not reflect my seniority or all the extra hours I have put in without being asked. If you passed this office building at night and saw a light on, chances are that would be me. I would like to meet with you to discuss my progress. At this point, I am at my wit’s end. I hope we can resolve this satisfactorily. If you want to know the kind of pressure we are put under here, ask Helen in Accounting. If she sang like a canary, we’d all listen.

While neither this version nor the ChatGPT version (below) is exactly perfect, the latter version followed several attempts by ChatGPT to reword the letter in a nicer way — with instructions to remove any hint of resentment, self-pity, rude words and, finally, any mention of other colleagues.

Here’s an edited version of ChatGPT’s attempt:

To whom it may concern:

I have been dedicated to serving this company for five years, consistently demonstrating hard work and resilience in the face of various challenges. Throughout my tenure, my primary focus has been providing exceptional financial advice to our clients, regardless of any difficult interactions or rude behavior directed towards me. Despite occasional moments of frustration, I have always maintained a professional demeanor.

However, I believe that my current salary does not adequately reflect my level of experience or the additional hours I have willingly invested in my work. Often, I find myself working late into the night. I kindly request a meeting with you to discuss my professional progress and compensation. I am more than willing to provide insight from my own experiences. Together, we can explore ways to address these pressures and find a mutually satisfactory resolution.

Amaral does not recommend using AI to write, rewrite or edit anything weightier than a perfunctory work memo, where it would serve much as a spell-checker does, and even then he recommends proceeding with caution. Using it for a resignation letter is a no-no. So is using it for an email requesting a pay raise (like the one above).

“You shouldn’t expect it to understand anything, or to produce anything that is reliable or truthful, because it is just picking up stuff at random,” he said. Like an autonomous car, it may be correct some or most of the time, but if you allow it full rein (or to reign over you), expect accidents.

Should a financial adviser use AI to pick stocks? “No! You should not do that,” Amaral said. “You’re throwing dice on which stocks to buy. It’s not going to be aggregating reliable information, and it’s not going to create documents that have an understanding of the world.”

If you’re a counselor, should you use ChatGPT to provide advice? Or, for that matter, an advice column? Or how about scientific research? His answer is no, no, and no. “The consequences of asking these stochastic parrots to take on important functions are pretty bad,” Amaral said.

There is one other big risk of using AI at work or anywhere for that matter — from human resources choosing candidates for a job or a boss outlining his mission statement to a sales representative refining their pitch: the internet is swimming in conscious and unconscious bias.

AI operates like social-media algorithms on steroids, feeding you information and misinformation supplied by an imperfect human race. “These systems are fundamentally biased because of the underlying politics of machine learning,” Sinnreich said.

“Therefore, swapping AI generation for human expression creates reinforced biases in reiterative ways and amplifies them,” he added. “It’s like a feedback loop, like putting a microphone next to an amplifier. One single note drowns out everything else.”

“A little bias quickly grows into a huge bias in the same way that a little sound turns into a huge screech. I’ve had AI write different kinds of stories about different ethnicities and boy, oh, boy do those stories turn out differently,” he said. “The algorithms create an ugly funhouse mirror.”

Our biases get fed back into the system, and create even bigger biases. In fact, Sinnreich said that social-media algorithms and computer-generated polling are already being used in politics, advertising and consumer research. If you’re not using it at work, others already are.

“We are entering an era when any mediated communication is going to be presumptively AI-generated,” he said. “The whole world is becoming Captcha. You identify a staircase so it gets better at generating fake staircases. ChatGPT fills in the gaps in genuine expertise with credible bullshit, and it’s not going to stop anytime soon.”

This brings us back to that New York lawyer’s apology in a Manhattan courtroom last Thursday for using AI to create a 10-page legal brief. “The court is presented with an unprecedented circumstance,” Manhattan federal Judge P. Kevin Castel wrote in a court document on May 4. Schwartz now faces a court hearing on June 8 to discuss possible sanctions.